Should you be investing in a new engine for your car when you have four flat tires?

Keeping up with the latest technology trends can be a daunting task for businesses. IT leaders can be drawn in by attractive vendor sales pitches, but these solutions are sometimes a poor fit for the organization and, more often than not, turn out to be solutions looking for a problem. Promises of a competitive advantage, and of keeping that edge, fade very fast. Chasing the latest tech trends can be a dangerous bet, often stressing an already aged and unsteady infrastructure that is unable to cope with the added pressure.

“Putting pearls on a pig” is how we used to say it in North Carolina.

The success of any tech stack rests on its foundation, and companies that try to “get by” with aged equipment risk serious issues like extended outages or lengthy diagnosis processes. The foundational infrastructure that can’t be ignored consists of the core items like servers, networks, and databases as well as the accompanying software frameworks which underpin the entire system. With a weak or outdated foundation, tech stacks may be subject to system failure, data loss, security vulnerabilities and more.

A recent report from Forrester Research found that many organizations have difficulty sustaining a stable tech stack because of persistent budget constraints and investments in the wrong areas. The survey found that 44% of companies experience regular system downtime, while 35% struggle to provide the resources needed to maintain their infrastructure. This creates a serious challenge for businesses, because these issues can do considerable damage to productivity, customer satisfaction, and ultimately profits. You just can’t build a skyscraper on a bed of wet sand.

To avoid the pitfalls of an unstable technology stack, businesses should focus on stability over shiny new features. Maintaining a stable, up-to-date foundation for the technology stack is far more important than constantly chasing the latest trends. To keep the tech stack running smoothly, infrastructure and software must be updated and upgraded regularly, system performance must be monitored, and resources must be invested in its upkeep.

There is no doubt that updating and upgrading infrastructure and software can be a complex and time-consuming process, and many businesses have difficulty finding the resources to accomplish it. In the long run, however, the benefits of a stable tech stack far outweigh the short-term costs. At some point the technical debt must be paid. By prioritizing stability, businesses can ensure that their tech stack is reliable, secure, and scalable, which will allow them to grow and succeed.

The Metaverse may be the next frontier for Healthcare

Ever since Facebook changed its name to “Meta,” there has been a lot of talk about ‘the Metaverse’. Zuckerberg announced the change to reflect what he sees as the coming of the next stage of the internet (sometimes called Web 3.0). However, Zuckerberg is far from the leading edge: the metaverse is not a new concept.

What is the Metaverse? The metaverse is a conglomeration of virtual worlds that can be accessed through VR (Virtual Reality) and AR (Augmented Reality) devices. It is touted as the next evolution of the internet, one in which we experience the internet in a tangible and immersive way. The metaverse has already gained traction in gaming and gambling with the rise of VR games and metaverse casinos. VR headsets like the Oculus have been on sale since 2016, and other brands even earlier than that. VR and AR are nothing new.

Surely, as this technology matures, there is more to it than petting a virtual cat or riding a virtual rollercoaster. Let’s think about how it can bring value to the real human experience, specifically healthcare. Let’s examine, at a high level, the relationship between the metaverse and healthcare and the potential roles (both beneficial and detrimental) that the metaverse and its associated tech may play in the healthcare sector.

The Beneficial Relationship between the Metaverse and Healthcare

The metaverse has shown some promise as a technology that can be readily leveraged for healthcare. Let’s look at some of the low-hanging fruit:

● Telemedicine & Telepresence

Telemedicine is simply the remote provision of healthcare. Healthcare is already being provided remotely, especially since the COVID-19 pandemic, when many people could not visit their doctors in person and had to consult them by phone or video call instead. Only 43% of medical facilities could provide remote treatment before the pandemic, compared to 95% as of February 2022. VR is also already being used to treat certain phobias by exposing the patient to triggering situations in a virtual environment.

With this rise in the use of technology, the stage is being set for virtual hospitals in the metaverse that can be accessed through a VR headset. Early versions aim to offer physiotherapy and counselling services first, paving the way for greater strides in telemedicine.

● Digital Twinning

Digital twinning means creating a virtual replica of ourselves, down to our internal organs and our current state of health, integrating past MRIs, CT scans, and X-rays. This digital twin could then be used to predict how quickly we might recover from an illness, the toll age would take on our bodies, and so on.

● Storing Medical Records

The metaverse has the potential to change the way we store our medical records. Currently, medical records are typically stored in centralized databases, and it can take weeks to get access to your own records. Blockchain technology, often described as an important component of the metaverse, could offer greater control over how medical data is stored and shared, and records stored on a blockchain could be safer and more secure.
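The security argument for blockchain records rests largely on hash chaining: each entry stores a hash of the previous one, so altering an old record breaks every link after it. The sketch below shows only that tamper-evidence idea, assuming hypothetical record fields; it is not a real blockchain (no distribution or consensus) and, by itself, does nothing to keep medical data private, which would still require encryption and access control.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, record: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes identically.
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def add_record(chain: list, record: dict) -> None:
    """Append a record, linking it to the hash of the entry before it."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"record": record, "prev": prev_hash, "hash": _digest(prev_hash, record)})


def verify(chain: list) -> bool:
    """Recompute every hash; an edited record no longer matches its stored hash."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

For example, after adding two hypothetical entries such as `{"patient": "P-001", "test": "MRI", "result": "normal"}`, `verify(chain)` returns `True`; quietly changing the first entry's `"result"` makes it return `False`.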

The Double-Edged Sword

The metaverse and VR/AR can be a great boon to the healthcare sector, but is there another shoe waiting to drop? Because it is still a new technology, there are uncertainties that make you wonder whether the disadvantages equal or outweigh the advantages the metaverse provides for the healthcare sector. There are some reports of nausea and dizziness associated with the use of VR headsets, but what other harmful effects might the metaverse have?

● Risks of Sustained VR Usage

Aside from nausea and dizziness, VR environments can cause eye strain, especially when the resolution or picture quality is poor and the headset is used for long periods.

There is also the possibility of behavioural changes. For now, VR does not create an accurate depiction of the real world. It presents many artificial stimuli that the brain may not be able to process properly, which can affect the user’s sense of direction and balance. Another risk is physical fatigue, especially if the activities performed in the virtual environment involve physical exertion.

● Data Safety and Privacy

It is no secret that Meta, a frontrunner in the creation of the metaverse, has been embroiled in scandals over how it handled users’ data on its social media platforms. So it is natural to have concerns about data safety, especially for children, who are eager users of technology but not nearly careful enough. The nature of the metaverse means that a lot of your personal information could be exposed, made accessible, and shared over insecure channels. It is therefore up to the creators of metaverse ecosystems to ensure that our data, and that of young people, is safe.

Though they have promised to take privacy seriously, we all know that the paramount consideration for them is turning a profit. However, since the metaverse is still in its nascent stage, there is enough time for regulators to erect safeguards that will protect users. Whether that is likely to occur is anyone’s guess. Historically it has been profit before privacy.

● Access Inequality

VR/AR devices are not cheap. Realistically, these devices are out of the price range of those who can barely afford internet access. So there is a fear that the metaverse could widen the existing divide in access to quality healthcare.

● Further Impact on Decreasing Socialization

Currently, most people are always on their mobile phones, and many prefer interacting online to interacting in person. Physical human interaction is on the decline, and it could decline further as more people own and use VR headsets. People may prefer to stay at home and experience the world virtually.

● Impact on Mental Health

Experts have not reached conclusive findings about the impact of sustained VR use on children’s mental health because of a lack of data. That lack of data stems from the novelty of VR technology: most children have not been exposed to VR headsets consistently, and those who have are not yet old enough for long-term mental health studies to be carried out.

However, what we do know is that many kids are tied to their phones 24/7. So what happens when they are donning VR goggles and never leaving the house? Preliminary studies suggest that children may be at risk of addiction, of being unable to distinguish the virtual world from reality, and of developing social disorders.

The Meta Classroom

Education, too, may move into the metaverse. Imagine students logging in with their avatars and sitting in a virtual classroom. A lot of schooling already happens online through apps like Zoom and Google Classroom, which suggests the meta classroom is not far off, especially with continued fears of further COVID waves.

The metaverse is finding its way into the health sector and will most likely break into the educational sector. However, with the aforementioned health risks of the metaverse, maybe it’s not something to look forward to?

It is clear that the metaverse has the potential to revolutionize healthcare as we know it, from treatment plans and medical records to patient interactions with medical personnel. However, there are still a few hoops we need to jump through to get there: security, privacy, equal access, abuse of the technology, and the effects of too much immersion in it. Not everyone is keen on receiving healthcare via the metaverse, so adoption and acceptance will be the challenge.

A Furlough System: The Alternative to Layoffs

By Joseph Venturelli

To get right to the heart of the matter:

What happens when you lay people off?

Each person you lay off typically receives a severance package worth weeks, maybe months, of pay. Outplacement services and legal expenses may also rear their ugly heads. In essence, an employee laid off today may have a financial impact on the organization for up to six months, and only then do you perhaps start saving money. If you were looking for an immediate impact on the current fiscal year, you may see some benefit in a month or two, but more likely next quarter or the quarter after that.

And how soon after a layoff do you start rehiring? In earnest, usually nine months to a year later, but some rehiring often begins within weeks of the layoffs.

Layoffs are extremely disruptive to organizations. People become instantly distracted: they worry whether more layoffs are coming and whether they are next, and they start to examine their options. Morale takes a nosedive. People remain preoccupied for well over a year, and with more bad news the cycle starts anew.

Sharing the pain with a furlough system

A furlough system is a way to spread the pain among all staff. Each staff member would be required to take a set number of days off without pay. Once the organization has determined the target savings, a furlough system can be activated that works in one of two ways (or a hybrid of the two).

Let’s assume each staff member has to take two weeks of furlough (10 business days).
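The savings arithmetic behind such a target is simple, and a small helper makes it easy to try other numbers. This is a minimal sketch assuming salaries accrue evenly over 260 paid days a year (52 weeks of 5 days, paid holidays included) and ignoring benefits and employer payroll taxes; the function names are hypothetical.

```python
import math

PAID_DAYS_PER_YEAR = 260  # assumption: 52 weeks x 5 days, paid holidays included


def furlough_savings(annual_payroll: float, furlough_days: int = 10,
                     paid_days: int = PAID_DAYS_PER_YEAR) -> float:
    """Salary saved if everyone on the payroll takes `furlough_days` unpaid days."""
    return annual_payroll * furlough_days / paid_days


def days_for_target(annual_payroll: float, target_savings: float,
                    paid_days: int = PAID_DAYS_PER_YEAR) -> int:
    """Smallest whole number of furlough days per person that meets the target."""
    return math.ceil(target_savings * paid_days / annual_payroll)
```

Under these assumptions, 10 unpaid days remove 10/260, or about 3.8%, of annual salary cost: roughly $384,615 on a $10 million payroll. Working backward, a $500,000 target on the same payroll calls for 13 days per person.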

The first way to deploy the plan is to require staff to take the furlough a week at a time, for a total of two weeks by the end of the fiscal year. Let’s call that the “tearing off the band-aid” method.

The second way is a furlough currency system. Using an online system, each employee is allocated 10 furlough chits (scrip or certificates, one per day) and can spend them one day at a time, whenever they have the freedom and flexibility to do so. In an organization with bi-weekly paychecks, there are usually two months in which staff receive three paychecks, and those months would likely be used more often. The value of the chits is that they can also be traded. For example, a highly compensated employee who can perhaps afford more unpaid days a year can take a furlough day from someone who can barely afford the time off, easing some of the pain and building esprit de corps.
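The chit system described above is essentially a small ledger: allocate a fixed number of chits, spend them one day at a time, and allow trades between employees. The sketch below is a hypothetical in-memory version (the class and method names are illustrative, not an existing product); a real system would sit on a database and tie into payroll and leave records.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class FurloughLedger:
    """Hypothetical chit ledger: one chit is an obligation to take one unpaid day."""
    balances: dict = field(default_factory=dict)    # employee -> chits still to use
    days_taken: dict = field(default_factory=dict)  # employee -> dates already taken

    def allocate(self, employees, chits_each=10):
        for emp in employees:
            self.balances[emp] = chits_each
            self.days_taken[emp] = []

    def use(self, emp, day: date):
        """Spend one chit on a single furlough day."""
        if self.balances.get(emp, 0) < 1:
            raise ValueError(f"{emp} has no furlough chits left")
        if day in self.days_taken[emp]:
            raise ValueError(f"{emp} already took {day} as a furlough day")
        self.balances[emp] -= 1
        self.days_taken[emp].append(day)

    def trade(self, giver, taker, chits=1):
        """Move chits so `taker` takes extra unpaid days in place of `giver`."""
        if taker not in self.balances:
            raise KeyError(taker)
        if chits < 1 or self.balances.get(giver, 0) < chits:
            raise ValueError(f"{giver} cannot give {chits} chit(s)")
        self.balances[giver] -= chits
        self.balances[taker] += chits

    def outstanding(self):
        """Furlough days still owed across the organization."""
        return sum(self.balances.values())
```

Because trades move chits one-for-one, the total number of furlough days never changes. Since each day is unpaid at the taker’s own rate, a trade toward a higher earner actually increases the dollar savings.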

The Challenge

The system presents its own challenges. Unions may not be on board, and the time off may have to be classified as a leave of absence, which could mean cutting off system access on furlough days. As time goes on, some may resent that they can’t live on a reduced salary, while others may believe they would not have been among those laid off and begin to resent the sacrifice. What is important to understand, however, is that while this system is in place, staff can make their own choices: if they decide to leave, they do so on their own terms, and that attrition (combined with a hiring freeze) helps the bottom line. Everyone feels empowered to make the choices that suit them best, and those not driven by money alone can help colleagues with more pressing financial needs.

Everyone understands the challenge, everyone contributes to the solution, and morale may take a small dip rather than a nosedive. Press coverage will likely be positive, presenting it as an innovative way to save jobs and maintain service levels. The organization remains strong, and staff won’t be mentally paralyzed.

The key is clear, transparent communication with a clearly outlined end game.

The ICSO Process

Readying or Repairing Information Technology before or after a Merger or Acquisition

By Joseph Venturelli

Here I provide an approach to smooth the process of merging two IT organizations when their companies are contemplating or undergoing a merger or acquisition (hereafter called a “merger”). The goal is to develop a working model that can be used in either situation. This model can also be deployed when a manager or executive comes into an organization that simply lacks standards and suffers because of it.

Drawing on my own past work and on research by others, I present an approach called ICSO, which is explained below. The goal of ICSO is to improve IT efficiency in order to get the house in order before or after a merger, or simply because that needs to be done to drive business value.

As a preview, some actionable items we will highlight include:

- Focus on cultural integration.

- Find executive sponsorship with a steering committee.

- Migrate user provisioning and authentication systems (i.e., the user directory) to a single platform.

- Replace custom code, where possible.

- Engage outside consultants, who are free from the burden of keeping current operations running, to guide the process.

- Appoint a strong team leader who has the personality to solve interpersonal and political issues.

- Capture metrics to track improvements.

The ICSO Process

I have taken past experience in merging IT operations and coined a term to describe it: ICSO.

Don’t worry: ICSO is not another tedious acronym that takes a lot of time to absorb. It is simply an on-the-ground approach to bringing the IT departments of two different companies together, or to readying IT at one company for a merger.

ICSO stands for:

- Integration: bringing together different systems into one.

- Consolidation: eliminating redundant systems.

- Standardization: implementing technical standards to help maximize compatibility, interoperability, safety, repeatability, and quality.

- Optimization: modifying a system to make some aspect of it work more efficiently or use fewer resources.

The goal is to build an enterprise-class infrastructure to support growth and expansion through acquisition, and to use the impetus for change that the merger creates to push that change, and others, through the organization.

Integration

First, let’s look at integration.

Different consultants often have different views on what the best practices are for integrating two IT organizations. This is good, as the superset of all their ideas lets one sort through what works best. Here, we will look at several ideas and draw together their key takeaways.

The Intersect Group

The Intersect Group addresses integration in their document “Best Practices in Merger Integration.”

Here are some key points.

- Establish a strategic framework for decision making.

- Dedicate integration resources.

- Frequently assess cultural progress.

- Communicate early and often to measure hoped-for performance improvement versus realized performance improvement.

- Objectively and systematically identify the highest priority synergies (candidates for savings).

- Offer incentives to key people to stay on if there is an indication that the best people want to head for the door.

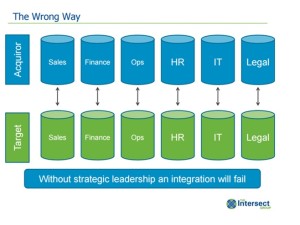

Here is a diagram from Intersect illustrating that the silo approach of yesteryear is not the best way to move forward:

“The Wrong Approach,” as you can see, is department-by-department. That cannot work, because a departmental manager, by definition, lacks the authority to make enterprise-wide changes. Instead, a steering committee with executives on board should be brought together to ensure cross-departmental communication and to solve cross-departmental issues.

This leads us to what Intersect glowingly calls “The Right Approach:”

As you can see, the alignment here is across processes rather than departments. For example, product development has to be plugged into changes in IT related to the products being developed, yet there is usually no single department called “product development,” except perhaps in a pharmaceutical business. As another example, “Operational Initiatives” can become drivers and guidelines for those changes. And so forth.

Cultural Considerations

The Intersect Group reaches the rather shocking conclusion that “80% of integration problems relate to culture issues.” We discuss that further below.

Imagine integrating two companies from two different countries with different languages and laws. That would be a “cultural integration problem” in the Merriam-Webster sense of culture. But “culture” here means the way the two organizations operate. That includes how issues are escalated, how the companies reward their employees, and everything from the dress code to whether employees have to pay for their own coffee.

McKinsey

The mighty McKinsey firm weighs in with their learned observations on how to find and create value in IT.

In the paper “Understanding the strategic value of IT in M&A,” the author pens this phrase: “We’ve all heard about deals where the stars seemed aligned but synergies remained elusive.”

The author goes on to say:

“In these cases, the acquirer and target may have had complementary strategies and finances, but the integration of technology and operations often proved difficult, usually because it didn’t receive adequate consideration during due diligence.”

Ouch. The strategic planners have just been chastised for not doing their due diligence.

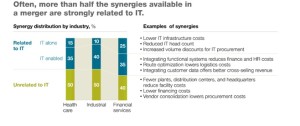

McKinsey says that 50% to 60% of the synergies (i.e., the cost savings that should arise from the merger) are “strongly related to IT.” So it is rather reckless not to discuss IT issues fully in the talks leading up to a merger.

Here are the steps McKinsey cites to drill into and flush out those IT-related issues and opportunities:

- The merger specialists need to interview employees who actually work with the systems, not just the CIO or management. This would let them find out, for example, that integrating the supply chain systems would take much more effort than hoped for, or that the warehouse management system is riddled with problems the vendors cannot fix. Such interviews give the merger specialists the chance to find out which systems don’t work well, or whose architecture is too rigid to adapt to the merger, to future changes in markets and product mix, and to the acquisition of additional companies down the road.

- These discussions lead to the decisions as to what systems to integrate, which to maintain as they are, and which should be consolidated. McKinsey suggests making these decisions quickly, but not in haste, to avoid a prolonged debate.

The importance of getting all of this correct is driven home in this graphic:

Graphic source: McKinsey

Here we see what one hopes to see when merging with another company: lower costs. More than half of that comes from IT.

The McKinsey paper is written at a high level for the strategic planner, but among the specific items they cite that IT staff can relate to are:

- Use SOA. A service-oriented architecture is the most flexible because it is built on loosely coupled interfaces. Rather than consuming transactions from a JMS message queue, a REST-style service retrieves them over HTTP as JSON objects. That avoids the older, heavier approach of XML and SOAP, and because REST runs over plain HTTP, it fits into the existing network and web infrastructure. In other words, SOA using REST is less complicated and more reusable. It also lets a company more easily write mobile applications for an existing web application, since the business logic lives in the back-end service, as it is (or should be) for the web page.

- Some of what McKinsey says might be dated, depending on the state of a company’s ERP system. Their paper was written in 2011, which is a long time ago for IT. McKinsey says that synergies are realized when a company uses one overarching system, i.e., the ERP system, to run the whole business. But Silicon Valley startups argue that a mix of SaaS components added onto some piece of the ERP system works best. In other words, SAP does ABC best, but other applications do DEF and GHI better and cheaper.
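The SOA point in the list above boils down to putting business logic behind a small HTTP interface that returns JSON, so a web page and a mobile app can call the same back end. Below is a minimal sketch using only Python’s standard-library WSGI tools; the `/orders/<id>` route and the `ORDERS` data are hypothetical stand-ins for a real order or ERP system.

```python
import json
from wsgiref.util import setup_testing_defaults

# Hypothetical in-memory data; a real service would query the order or ERP system.
ORDERS = {"1001": {"id": "1001", "status": "shipped", "total": 49.95}}


def order_service(environ, start_response):
    """REST-style endpoint: GET /orders/<id> returns that order as JSON."""
    method = environ.get("REQUEST_METHOD", "GET")
    parts = environ.get("PATH_INFO", "").strip("/").split("/")
    headers = [("Content-Type", "application/json")]
    if len(parts) != 2 or parts[0] != "orders":
        status, payload = "404 Not Found", {"error": "no such resource"}
    elif method != "GET":
        status, payload = "405 Method Not Allowed", {"error": "only GET is supported"}
        headers.append(("Allow", "GET"))
    elif parts[1] in ORDERS:
        status, payload = "200 OK", ORDERS[parts[1]]
    else:
        status, payload = "404 Not Found", {"error": "order not found"}
    body = json.dumps(payload).encode("utf-8")
    headers.append(("Content-Length", str(len(body))))
    start_response(status, headers)
    return [body]


def call(path, method="GET"):
    """Invoke the WSGI app in-process, the way a test client would."""
    environ = {}
    setup_testing_defaults(environ)
    environ["PATH_INFO"] = path
    environ["REQUEST_METHOD"] = method
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(order_service(environ, start_response))
    return captured["status"], json.loads(body)
```

To serve it for real, the same `order_service` function can be passed to `wsgiref.simple_server.make_server` or any production WSGI server. The point is that the client, whether browser or phone, only needs HTTP and JSON.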

Finally, McKinsey says that a well-tuned IT organization can make a company a more attractive takeover target, one whose board of directors can command a higher price for its stockholders.

The opposite side of that statement is, “CEOs and CFOs should be wary of embarking on an M&A growth strategy that will require a lot of back-end integration if their corporate IT architectures are still fragmented: the risk of failure is too high.”

Consolidation

Realizing the cost savings from merging two operations requires collapsing different systems into one. Consolidation is different from integration. Integration means connecting systems, whereas consolidation means shutting one down and then doing the necessary data conversion and user training to get everyone moved onto the surviving platform.
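Much of the data-conversion work in a consolidation is reconciling records from the system being retired with the one that survives, as in moving two user directories onto a single platform. The sketch below is a hypothetical example that matches users on a normalized e-mail address. That matching rule is an assumption; real migrations often need an employee ID or manual review. Existing values in the surviving system win, and disagreements are reported instead of being silently overwritten.

```python
def _key(email: str) -> str:
    # Normalize addresses so "Ana@X.com " and "ana@x.com" match.
    return email.strip().lower()


def consolidate_directories(surviving: list, retiring: list):
    """Fold user records from a retiring directory into the surviving one.

    Returns (merged_users, conflicts); each conflict is
    (email, attribute, surviving_value, retiring_value).
    """
    merged = {_key(u["email"]): dict(u, email=_key(u["email"])) for u in surviving}
    conflicts = []
    for user in retiring:
        key = _key(user["email"])
        target = merged.get(key)
        if target is None:
            # User exists only in the retiring system: carry them over.
            merged[key] = dict(user, email=key)
            continue
        for attr, value in user.items():
            if attr == "email":
                continue
            if attr not in target:
                target[attr] = value  # fill gaps from the retiring system
            elif target[attr] != value:
                conflicts.append((key, attr, target[attr], value))
    return list(merged.values()), conflicts
```

The conflict list is where the cultural side shows up: someone has to decide whose data is right, which is one more reason the consolidation needs an empowered team leader.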

Consolidation is where cultural issues in IT can become an impediment. It means that one department is going to feel threatened when the system it has supported for years is targeted for replacement. And then there are personalities. Lots of people do not adapt well to change, others embrace it, and then there are those with vested interests whose jobs are tied to running their fiefdoms. This is where executive involvement and a strong team leader can keep the momentum going. Employee incentives can also help.

Let’s take a look at one case study where both cultural and technical issues were a problem and how that situation turned out.

Case Study: Two Cellular Carriers

Here we describe a situation with two cellular companies that merged. We will call them Company X and Company Y.

There was a bad omen hanging over this merger from the outset. Their most basic asset, the cellular network, worked completely differently. Company X used the CDMA cellular standard, while Company Y, with its walkie-talkie capability, used iDEN. Neither used GSM, the main standard used around the world. So the basic systems, apart from IT, could not have been more different.

Beyond that, the cultures differed: one company had gray-haired managers running everything as they had for decades, while the other had a startup culture with lots of kids running around on skateboards, figuratively speaking.

As for the IT integration and migration, that did not turn out well. Someone made the ill-fated decision to integrate key sales and user provisioning systems.

Having decided to integrate provisioning, the newly merged companies were using both Microsoft Identity Integration Server (MIIS) and Oracle Xellerate to onboard employees, contractors, store employees, and users at Walmart and other retailers where X and Y phones were sold. The approach taken was to create interfaces between the user provisioning systems so that users provisioned in one system would also be provisioned in the other company’s equivalent.

For example, selling phones required two systems, one for each company, so every store employee needed access to both. Users provisioned in Xellerate therefore had to be pushed to the other company’s MIIS system, which would then provision them to that company’s phone sales system.

The systems basically fell apart because of load and software errors. There were long delays in provisioning employees. Support was so overwhelmed that staff simply stopped reading their email and responding to tickets. Directors reached over the heads of managers to work directly with staff to fight fires. Newly opened stores had sales clerks standing idle, unable to sell phones because of days-long backlogs in the Microsoft system, which could not handle the volume of data. The Xellerate product at that time did not work well either; it crashed multiple times per day. That situation persisted for about a year.

In hindsight, the company in that case should have abandoned both systems and used something better.

Standardization

Open source software for programming and powering hardware has become so prevalent that it has educated people on the importance of standards. Google, Yahoo, Amazon, and Netflix have all turned over key portions of their code to Apache, where an army of developers from different companies, as well as individuals, can contribute to it. Ubuntu, one of the most widely used Linux distributions, Hadoop for big data, the OpenStack cloud computing platform, and even some antivirus databases are delivered in a similar community-driven way.

Standardization also applies to processes, where it means using the same process across different departments. Identifying those shared processes is the job of the business process consultant working with IT.

Finding the Right Balance at Nestle

CIO magazine says that there is a balance between enforcing standards across the whole of the business and letting local organizations vary from rigid rules that do not work in all cases. They point to Nestle as one company that has found the right balance.

One Nestle executive laid out the problem of disparate systems when he made the rather funny observation that, “We had 10 definitions of ‘sugar’ in Switzerland alone.” He went on to say, “There was very little global standardization of data, systems, or processes.”

Nestle took a difficult route to enforcing standards: adopting SAP, which is a heavy lift for any company. But doing that led to developing standards. Some of that was because they needed to change processes to fit the software. Other changes came from using the momentum of the project to reengineer processes not directly related to SAP.

As the share of the company using standardized procedures grew from 30% to 80%, Nestle gained the added benefit of finding it easier to acquire new companies. Nestle backed off its goal of 95% standardization when it realized that Swiss efficiency did not fit all situations and subsidiaries.

Among recommendations coming from CIO:

- Start with back office operations first.

- Pick good candidates for pilot tests.

- Get top management support.

- Build strong governance.

Past experience has shown that if you are not familiar with the culture of the organization, you may find yourself struggling with the definitions of even the simplest words, like “done” and “everywhere.”

Optimization

Optimization means modifying a system to make some aspect of it work more efficiently or use fewer resources. Consultants like to say that, “Optimization drives business value.”

Optimization can apply to systems in different ways:

- Project management.

- Better integration between systems to reduce duplication and manual processes.

- Better utilization of computing resources (like moving networking, storage, and virtual machines to cloud vendors) to drive down cost per unit.

- Using SaaS components to lower costs yet obtain best-in-breed solutions.

- Training developers on new tools.

- Using Analytics.

- Taking inventory to see what can be cut.

- Paying close attention to security and cybersecurity as errors there run up costs and could lead to a potential disaster.

Project Management

There are a host of newer project management techniques that speed up software development and deliver results earlier in the project lifecycle. Among these are Agile, Scrum, and many variations thereof. In addition to keeping the focus on the most important items, these push responsibility for testing onto the developers, gaining efficiencies by replacing the separate QA team with a smaller one integrated into the project team. Tests are also often written before coding starts, sometimes as plain-English scenarios, which keeps the focus on making the system work without spending time coding items that are not absolutely necessary.
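Here is a small illustration of the test-first idea, written with Python’s built-in `unittest`. The shipping-fee rule is hypothetical. In a test-first workflow the two tests, whose names and Given/When/Then comments read as plain-English scenarios, would be written first, and `shipping_fee` would then be implemented just far enough to make them pass.

```python
import unittest


def shipping_fee(order_total: float) -> float:
    """Hypothetical business rule: orders of $50 or more ship free, others pay $5."""
    return 0.0 if order_total >= 50 else 5.0


class ShippingFeeScenarios(unittest.TestCase):
    """Written before shipping_fee existed; each test states one expected behavior."""

    def test_order_of_fifty_dollars_ships_free(self):
        # Given an order totaling $50, when the fee is computed, then it is zero.
        self.assertEqual(shipping_fee(50.00), 0.0)

    def test_order_under_fifty_dollars_pays_flat_fee(self):
        # Given an order totaling $49.99, when the fee is computed, then it is $5.
        self.assertEqual(shipping_fee(49.99), 5.0)
```

Running `python -m unittest` on the module executes both scenarios. Behavior-driven tools take the same idea further by letting the scenarios themselves be written as plain English.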

Integration with Other Systems

Systems improve when manual processes are automated and handed over to the computer.

Better Utilization of Computing Resources

It may no longer make financial sense to buy your own storage array for archival when you can use Amazon Glacier for pennies per GB. Nor may it make financial sense to spend capital on servers to fill the racks in your own data center when you can rent virtual machines from cloud vendors. Even DNS and failover can be pushed out to cloud vendors.

Using SaaS

You should not write your own shipping and receiving module when you can tap into UPS or FedEx logistics services.

Train Developers on new Tools

Just when developers have learned Hadoop MapReduce, Apache Spark threatens to make it irrelevant. The IT landscape is shifting constantly, so skills need to adapt. There is no reason to write all your interfaces and new applications in Java, which dates from the mid-1990s, when so many newer tools make programming quicker and less error-prone. One example is to use JavaScript across the stack: Node.js, a JavaScript runtime for the server, alongside client-side JavaScript for the web pages themselves, which makes building web applications much easier than with Java. A single page that handles multiple steps also reduces latency compared with transmitting lots of pages back and forth. Programmers should be free to use Ruby, Python, or whatever gets the task done more easily. It also makes developers happy to branch out, and happy people do good work. If this sounds like it runs counter to the idea of standards, it does not: in this case the programmers are using international standards rather than company standards.

It is not usually necessary to send developers to training if you have good developers. All you need to do is give them downtime to watch tutorials, and they will teach themselves.

Analytics

Analytics is the application of mathematics and statistics to business problems. It includes such widely understood techniques as linear programming (optimization) and anomaly detection.

Analytics is widely used in application performance monitoring, but it can also be used to measure the mean time to close tickets and compliance with service level agreements. Tracking such metrics is necessary to measure progress on IT initiatives. Analytics also avoids turning loose resources to run down problems or issues that are not statistically important.
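
To make the ticket metrics concrete, here is a small Python sketch that computes mean time to close and SLA compliance for a handful of tickets. The ticket data is made-up sample data and the 8-hour SLA target is an assumption. A real version would read an export from your ticketing system, whose format is not specified here. The sketch also reports the median, because a single slow ticket can pull the mean well away from the typical case. That is one way analytics helps separate statistically important problems from noise.

```python
from datetime import datetime, timedelta
from statistics import mean, median

# Made-up sample tickets: (opened, closed). The layout is an assumption.
tickets = [
    (datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 13, 0)),   # 4 h
    (datetime(2024, 1, 2, 10, 0), datetime(2024, 1, 3, 10, 0)),  # 24 h
    (datetime(2024, 1, 3, 8, 0), datetime(2024, 1, 3, 10, 0)),   # 2 h
    (datetime(2024, 1, 4, 14, 0), datetime(2024, 1, 4, 20, 0)),  # 6 h
]

SLA = timedelta(hours=8)  # assumed SLA target: close within 8 hours


def hours(delta: timedelta) -> float:
    """Convert a timedelta to fractional hours."""
    return delta.total_seconds() / 3600


durations = [closed - opened for opened, closed in tickets]
mttc_hours = mean(hours(d) for d in durations)      # mean time to close
median_hours = median(hours(d) for d in durations)  # typical ticket
sla_met = sum(1 for d in durations if d <= SLA) / len(durations)

print(f"Mean time to close:   {mttc_hours:.1f} h")    # 9.0 h
print(f"Median time to close: {median_hours:.1f} h")  # 5.0 h
print(f"SLA compliance:       {sla_met:.0%}")         # 75%
```

In this sample, one 24-hour ticket pushes the mean above the 8-hour target even though three of the four tickets met it.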

Taking Inventory

Datatrends mentions the common-sense idea of taking an inventory of computing assets to see where there is more than is needed. One target is software licensing. Some products and cloud services are sold on a number-of-records, number-of-transactions, or number-of-users basis, and there is potential for waste there. Take the case of databases. Old, stale records are not always purged from the system, because of the need to maintain referential integrity or simply the difficulty of running that type of mini conversion. So the customer is paying to carry data records that have gone stale. That could be a good time to renegotiate with the vendor.
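
One way to find stale records without breaking referential integrity is to select only the rows that are both old and no longer referenced by any child table. The sketch below uses Python's built-in `sqlite3` module with an in-memory database. The table names, columns, sample rows and the 2019 cutoff date are all hypothetical. A production purge would also need backups and a check of any record-retention rules.

```python
import sqlite3

# In-memory demo database; all table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # make SQLite enforce foreign keys
conn.executescript("""
    CREATE TABLE customers (
        id INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        last_activity TEXT NOT NULL  -- ISO date 'YYYY-MM-DD'
    );
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id)
    );
    INSERT INTO customers VALUES
        (1, 'Active Co', '2024-05-01'),
        (2, 'Dormant LLC', '2015-03-10'),
        (3, 'Old But Referenced', '2014-07-22');
    INSERT INTO orders VALUES (100, 1), (101, 3);
""")

CUTOFF = "2019-01-01"  # assumed retention cutoff

# Stale = no activity since the cutoff AND no child rows still pointing at it,
# so deleting it cannot violate a foreign-key reference.
stale = conn.execute("""
    SELECT c.id, c.name FROM customers AS c
    WHERE c.last_activity < ?
      AND NOT EXISTS (SELECT 1 FROM orders AS o WHERE o.customer_id = c.id)
""", (CUTOFF,)).fetchall()
print(stale)  # [(2, 'Dormant LLC')]

conn.executemany("DELETE FROM customers WHERE id = ?", [(cid,) for cid, _ in stale])
conn.commit()
print(conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0])  # 2
```

Customer 3 is just as old as customer 2 but is left alone, because an order still references it. Rows like that are what make real purges harder than a simple date filter.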

Summary

Getting ready for a merger, or reacting to one, lets a company focus on Integration, Consolidation, Standardization, and Optimization. Having IT discuss its issues and involving IT in the pre-merger studies warns strategic planners where there could be difficulties. Once the merger has gone ahead, it is important to determine which systems can be consolidated and which should be shut down. Any change like this needs to be driven at the executive level. It also needs outside management consultants who are free of daily operations and so have time to focus on integration. Standards should be imposed across the organization on both systems and processes. Leveraging the cloud by using SaaS components when possible is also important. All of this adds business value to the enterprise, both in cost savings and in negotiating the merger itself.

About the Author

Joseph Venturelli earned his degree in design from the School of Visual Arts in New York City. His debut in healthcare Information Technology began as a system administrator, concentrating on the technical oversight of information systems at Presbyterian Hospital in Charlotte, North Carolina in 1990. Early on Joseph trained and became certified as a system engineer and certified trainer. He has been responsible for leading teams of technologists supporting infrastructure, web services, data center operations, call centers, disaster recovery planning and help desk services, and has managed scores of system implementations over the past twenty-five years.

Joseph co-led the creation and implementation of an electronic medical record system, which included full financial, transcription and scheduling integration. Physicians were given office access to the scheduling and clinical documentation platforms. This integration strategy created immediate medical record completion, eliminating the need for back end inspection and rework resources. Joseph has consistently delivered efficiencies through standardization, recruiting and retaining key talent and launching enthusiastic customer service programs for a variety of professionals, patients and vendors.

Joseph has been published in numerous technical journals and industry magazines and has authored several books, including one on patient advocacy. A seasoned executive, Joseph has worked as Chief Executive Officer for a Southeast consulting firm, Chief Information Officer for an ambulatory surgery center company, Chief Information Officer for a Midwest county hospital system, and Chief Technology Officer for a New England hospital system.

What happened to Critical Thinking Abilities?

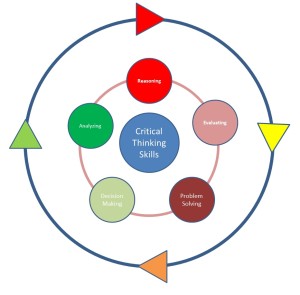

Information technology has simplified, and continues to simplify, the work we do. The way we collect data, analyze it and communicate it is becoming easier every day. Social media such as Facebook, Twitter and other sites have facilitated communication by removing distance as a barrier to conveying information. It is therefore easier to gather ideas and views from many people in all corners of the world, the new global village. An individual in the western part of the world can get real-time information from someone in the eastern part. The Internet is the essential infrastructure through which the two sides interact. So let’s discuss the impact of technology on how the upcoming generation thinks. We will also look at its adverse effects on the critical thinking, reasoning and decision making of people in this era.

Social media in general is changing how Millennials (also known as Gen Y’ers) communicate, and new social media sites keep emerging over time. People in their teens and twenties spend much of their time online seeking solutions to their problems, and as a result they lose touch with basic one-on-one communication. Consultation is done over the internet with the friends one has online. Overreliance on search engines and social media for solutions is taking the place that critical thinking once held. A person facing a particular challenge is comfortable seeking the solution through Facebook, Twitter, Reddit and other common sites. They combine the thoughts of others into a solution that they then present as their own. Borrowing ideas and opinions in this way is deleterious to their own critical thinking ability. Thinking is important because it connects feeling with what one hears. In this generation, conversations on social media use the “emoji” (Japanese for “picture character”) to represent feelings. While cute, these small icons are unlikely to represent human feelings effectively. Their continued use is contributing to a disconnect between thoughts and feelings such as worry, happiness, anger and even laughter. They cannot convey our sentiments fully; they are just a poor representation of emotion.

In these upcoming generations, the ability to recall relevant information and apply critical thinking skills is becoming less apparent. Search engines such as Google have simplified how we search for information: all one needs is a few keywords, and the results appear in seconds. The need to draw on “learned information” is virtually nil with so many databases to draw upon. As information technology improves, the capacity to remember important details declines, producing a generation of researchers rather than thinkers. It should come as no surprise that, when pressed to speak publicly, a millennial may lack the ability to express his or her thoughts to an audience. The likely reason is an inability to connect one data point to the next in a stream of consciousness without the crutch of a search engine or online resource. At root is a basic inability to see the relevance of the bigger picture and string a set of thoughts together.

Suppose you put a millennial in a room with no smartphone, computer or Wi-Fi and ask them, “If you travel by train from New York to Boston, how many railroad ties will you pass over on your trip?” You would likely not get an answer, because they lack the critical thinking skills, or even the memorized facts, to hazard a guess. Yet give them a computer and let them “Google it,” and they may come up with an answer based on what someone else says is true. An answer found through a search engine is not necessarily accurate or precise; it is simply what is widely accepted as a “good enough answer.” Websites like Snopes.com have built an entire industry around debunking the ridiculous and sometimes believable snippets of data that pop up on social media. To this day my 21-year-old daughter still believes that September 11th (9/11) was a government cover-up because of what she read on the Internet.
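
The railroad-tie question can be answered with a back-of-the-envelope (Fermi) estimate. It needs no search engine, only a couple of rough figures and some multiplication. The inputs below are assumptions for illustration, not measured values: about 230 rail miles between New York and Boston, and one tie roughly every 20 inches along the track.

```python
# Back-of-the-envelope (Fermi) estimate of railroad ties passed over
# between New York and Boston. Both inputs are rough assumptions.

INCHES_PER_MILE = 5280 * 12  # 63,360 inches in a mile


def estimate_ties(route_miles: float, tie_spacing_inches: float) -> int:
    """Approximate number of ties along one track of the given length."""
    total_inches = route_miles * INCHES_PER_MILE
    return round(total_inches / tie_spacing_inches)


if __name__ == "__main__":
    # Assumptions: ~230 rail miles, a tie roughly every 20 inches.
    ties = estimate_ties(route_miles=230, tie_spacing_inches=20)
    print(f"Roughly {ties:,} ties")  # Roughly 728,640 ties
```

Changing either assumption moves the answer, but any reasonable inputs land in the several hundred thousands. That is the point: a defensible guess is within reach of anyone willing to reason it through.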

Knowledge provides the groundwork for critical thinking and for critiquing the findings of other people, rather than blindly accepting their claims at face value. In this digital generation, knowledge and wisdom are rare because everyone wants a shortcut to solving his or her problem. Personal opinions no longer count for much compared with what other people say or think. The careful analysis of a challenge is undermined by impatience and a lack of self-esteem. Time is becoming so scarce that reading a 500-page book seems impossible, because many people are preoccupied with other online activities. A potential millennial reader would rather read a book review on the internet than the actual book. Moreover, because thinking involves creating an image in our minds, they end up missing part of what the book has to offer. Take any book, fiction or non-fiction. The key to really appreciating it is the moments when you stop reading and digest what you have just read. You try to understand what the author was trying to convey or what the main character was trying to accomplish.

A very apropos acronym seen online more often these days is “tl;dr” (too long, didn’t read). It is a shorthand that a reader adds in an online discussion to indicate that a passage seemed too long to be worth the time to digest. Step back and realize that you see this in response to a message of perhaps 150 words. It suggests that the upcoming generations not only lack critical thinking skills but also lack the patience or interest to take part in a discussion that requires reading anything longer than a “tweet.”

Moral erosion can be another unintended outcome. Thinking involves asking whether something is good or evil, or capable of causing us harm. This part of thinking is important and of value to humanity, yet it seems absent in the minds of “20-somethings.” Consequently, most are engaging in activities they see others do without assessing the outcomes. They also seem to lack the ability to think several steps ahead: “If I do this, then this could happen, which will result in this...” An individual would rather experiment than refer to similar events in the past. In the end, he or she regrets the things they did or did not do.

Technology is essential to human beings, but misusing it is deleterious to us and to the way we think. Spending too much time surfing the net discourages the desire to analyze and process data. One example is the inability to do simple calculations without a device. How many people can figure a 15% tip on a dinner bill without taking out their phone? If this trend continues, decision making and critical thinking will become a thing of the past. Those who choose to engage in and master them will be the outliers, and potentially the leaders of the future.
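
The 15% tip is a good example of arithmetic that needs no phone. Take 10% by moving the decimal point one place, then add half of that. The short Python function below simply mirrors that mental shortcut, using `Decimal` so the cents come out exactly. The $64.00 bill is an arbitrary example.

```python
from decimal import Decimal, ROUND_HALF_UP


def tip_15_mental(bill: Decimal) -> Decimal:
    """15% tip the way you might do it in your head: 10%, plus half of that."""
    ten_percent = bill / 10            # move the decimal point one place
    tip = ten_percent + ten_percent / 2
    return tip.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


print(tip_15_mental(Decimal("64.00")))  # 9.60  (6.40 + 3.20)
```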

Will Cerner’s award of the Department of Defense contract ultimately result in a win for Epic?

By Joseph Venturelli

About a week ago, the United States Department of Defense decided to award Cerner a potential eighteen-year EHR deployment deal that could pay out $11bn.

Cerner’s EHR suite will replace the Department of Defense’s current system in its 55 hospitals and 350+ clinics, as well as on board ships and submarines and at other locations in the military theater of operations.

So why might this be a good thing for Epic? And why might Judy Faulkner, the brilliant, cunning businessperson that she is, have let this deal get away?

Well, let’s look at some of the data. Epic has been named the #1 software suite by KLAS every year since 2010. Epic has about 315 customers. These include 69% of the Stage 7 hospitals in the United States, 83% of the Stage 7 clinics in the United States and 71 children’s hospitals in the United States.

Epic is innovating on a weekly basis, with updates and new functionality coming out at breakneck speed. It is firmly entrenched in Kaiser Permanente, Cedars-Sinai, the Cleveland Clinic, Mount Sinai and Yale New Haven.

So let’s quickly look at the players who bid on the deal:

First we had CSC with HP and Allscripts. Allscripts’ ambulatory and acute products don’t really interoperate well, and Eclipsys hasn’t moved much since its acquisition in 2010, so this team was a likely “non-starter.”

Then we had CACI with IBM and Epic. What is there to say about Epic and IBM? My guess is that this would have been the team to beat. Epic and IBM both have very regimented implementation strategies; one might even call their way of doing things “military.” That is a structure you would have thought the DoD would appreciate, but keep in mind Epic’s lack of flexibility. Remember also that the contract required bringing in small businesses for 30% of the work; this may have been a tough pill for Judy to swallow. Either way, my money would have been on this team.

Finally, we have the Cerner / Leidos / Accenture trio. Leidos was the incumbent. Bringing on the powerhouse Accenture, with its ton of healthcare experience, really stacked the deck in the team’s favor. To me it just seems like the most comfortable choice for the DoD to make: basically, “the devil you know.”

Maybe Epic customers came out the winners here. Consider what Micky Tripathi, CEO of the Massachusetts eHealth Collaborative, said: “My biggest worry isn’t that Cerner won’t deliver, it’s that DOD will suck the lifeblood out of the company by running its management ragged with endless overhead and dulling the innovative edge of its development teams. There is a tremendous amount of innovation going on in health IT right now. We need a well-performing Cerner in the private sector to keep pushing the innovation frontier. It’s not a coincidence that defense contractors don’t compete well in the private sector, and companies who do both shield their commercial business from their defense business to protect the former from the latter.”

I challenge you to think about the sheer drag that this contract will put on Cerner and how it has the potential to slow the company down in so many ways. Those ways may not become apparent for 18 to 24 months. Once implementation and training start appearing on the schedule, however, Cerner may find itself bogged down in the bureaucratic mud.

However, in its call last week, Cerner President Zane Burke assured investors that “they were fully prepared to meet their staffing requirements of the project.”

When asked about the potential drag on its current customer base, Burke said, “We believe this is a positive development for our clients, and they should have confidence that Cerner will continue to execute to meet all of our current and future commitments.” He added, “We do not expect this to have a material impact on bookings, revenue, or earnings in the near term since the project will have several phases and will start with a small initial rollout.”

Is there a better way to cripple your competitor than to see them awarded a potentially 18-year contract that will likely leave them unable to concentrate on much else? Meanwhile you (Epic) continue to pick off the remaining portion of the U.S. market, keep innovating, and roll out neat new stuff while Cerner is staffing up and creating the project plan.

When Frank Kendall, Under Secretary of Defense for Acquisition, Technology and Logistics, announced the award, he said that the decision was based in large part on the aim “to limit changes in the current electronic health records system.”

He added that “Market share was not a consideration” and that “We wanted minimum modifications.”

Kendall went on to say, “The trick … in getting a business system fielded isn’t about the product you’re buying, it’s about the training, the preparation of your people, it’s about minimizing the changes to the software that you’re buying.”

This leads one to wonder about the product itself. Suppose it isn’t about the quality of the product, or about the fact that the market has widely adopted the product you didn’t pick. Then just how would you deploy a piece of software over an 18-year engagement while minimizing changes? Something has to give.

The DoD also said that Cerner’s EHR will be “an important enabler of sustaining care anywhere people might serve, a vital piece of care coordination.” The DoD further said that the system will reside at more than 1,000 sites around the globe. The commercial software must therefore interoperate not only with the VA but also with the private-sector healthcare entities that deliver as much as 70 percent of service members’ care.

By the time the DoD gets this all rolled out in 2030, Epic will likely have most of the private sector, here and abroad, locked up.

Cris Ross, Mayo Clinic Chief Information Officer, explained to Healthcare IT News that Mayo Clinic is switching from Cerner to Epic because of the need to converge its practices. He said Epic was the better option because of its reliability in revenue cycle and patient engagement.

He added that Epic’s ability to connect with other EHRs through the Direct protocol and other means was impressive, as was the volume of data flowing across networks from Epic to other EHRs.

So what opportunity do we suppose this leaves Epic? While Cerner is delivering project plans, Epic will continue strengthening an integrated EHR across organizations. It will start with Mayo, for which convergence of practices is a core mission that strongly supports Epic’s long-term objective. Unlike Cerner’s contract, Mayo’s deal is a long-term relationship based on technological commitment, which will create a foundation for Epic’s innovative strategy.

Can the DoD make a breakthrough in the 21st century using technology from the 20th century? To achieve an advanced healthcare system, an open, internet-based platform committed to building a national health information network is paramount.

As Sun Tzu put it in The Art of War, “The greatest victory is that which requires no battle.” So the question remains: did Epic lose the deal to Cerner? Or did Epic let Cerner have the deal, knowing that it would ultimately begin the death spiral for Epic’s largest competitor? Or should I just get fitted for a tin foil hat?

* September 2019 (four years later): only four locations in Idaho and California are live on Cerner.

** June 2022: the DoD reaches the halfway point, while the VA struggles.

*** Fast forward eight years to April 2023: the Department of Veterans Affairs announced that it would put its $16B EHR rollout on an indefinite pause until Cerner/Oracle could address the very serious issues affecting the VA.

July 2023:

Costs for the Veterans Affairs Department’s Cerner EHR rollout continue to soar, causing tension between the VA and Congress, with some lawmakers wanting to ax the program, while others advocate for the VA to take one more shot at the implementation, Politico reported July 21.

In 2018, the VA’s EHR costs were initially projected to be $10 billion over 10 years but have grown to $50.8 billion over 28 years. These upticks have caused some lawmakers to want to shut down the project.
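
The two cost figures above are easier to compare when reduced to simple ratios. This Python snippet does only straight-line arithmetic on the numbers as reported. Actual program spending is not spread evenly across years, so the per-year averages are illustrative only.

```python
# Straight-line arithmetic on the reported VA EHR cost projections.
# Real spending is not spread evenly, so per-year figures are illustrative.


def average_per_year(total_billions: float, years: int) -> float:
    """Simple average annual cost in billions of dollars."""
    return total_billions / years


original_total, original_years = 10.0, 10  # 2018 projection
revised_total, revised_years = 50.8, 28    # revised projection

growth_factor = revised_total / original_total                # ~5.08x
orig_avg = average_per_year(original_total, original_years)   # 1.00 $B/yr
revised_avg = average_per_year(revised_total, revised_years)  # ~1.81 $B/yr

print(f"Total projected cost grew about {growth_factor:.2f}x")
print(f"Average per year: ${orig_avg:.2f}B -> ${revised_avg:.2f}B")
```

Total projected cost grew roughly fivefold, while the straight-line annual average less than doubled, because the time horizon nearly tripled.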

There have been several bills introduced to end the program as issues with the EHR system continue. According to the publication, the VA’s Cerner EHR system led to the deaths of four patients, and it has suffered outages at facilities that have implemented it.

“It has been a nightmare,” House Veterans’ Affairs Chair Mike Bost, R-Ill., told the publication.

More than a dozen officials who have been involved or are intimately familiar with the EHR project told Politico that “the system’s problems are myriad.”

“The program was never designed to be successful,” Peter Levin, a former VA chief technology officer, told the publication. “Not making difficult choices and not making good choices is costing, at the very least, taxpayers billions of dollars.”

Managing Technologists, Geeks and Nerds

By Joseph Venturelli (self-proclaimed geek)

Bill seldom makes it home before 2 a.m., and when he does, it’s simply to change clothes and freshen up. He does not eat proper meals or get good sleep, though he will readily fall asleep on the couch with the computer still on. As the resident IT genius at a healthcare company, he is the best at what he does. Most of the time he wishes he could get comfortable and sleep. With unending projects and constant pressure from his management team, however, he can barely find time even to tidy up his apartment. The off-duty time he gets between shifts is usually consumed by consulting and extra research for new projects. Bill does not really have relationships with his colleagues, but he is well aware of how they perceive him. His poor self-care is clearly a product of his job and his management. Not that he takes his job too seriously; he is just oblivious to the outside world and has to deliver in this one.

“Geek” is a term for a person who pursues a particular complex field with intense intellectual focus. “Nerd” describes a person who is highly intellectual and obsessive but lacking in social skills. Such people forgo mainstream activities to spend inordinate amounts of time on obscure pursuits related to fiction or fantasy. The two words are often used as synonyms, but most people would argue that they differ slightly in meaning.

Geeks are more proactive. They engage in hands-on work in the technical fields they study and are sought out for their expertise and skills. Nerds, on the other hand, tend to be slower and more passive, spending most of their time studying and researching written works.

These individuals make up the ranks of technology and computer experts, and sometimes engineers, and are generally referred to as technologists.

The first step in managing geeks and nerds is understanding their nature and creating an environment they can fit into. Insight into their minds, motivations, beliefs, and behaviors is important for gaining meaningful influence over their pursuits. Their nature revolves around thinking and acting from a distinctly technical perspective. This holds whether they work in technology, business technology management, research science, teaching, general management, entrepreneurship, corporate training, or writing. In each of these roles the breadth of their knowledge and experience matches their ability to perform. With this understanding, you can develop a model of how technology-focused knowledge works and how it adds value to an organization’s objectives.

Technologists display a number of behavioral traits that inhibit not only advancement up the technological ladder but also smooth relations with non-technical colleagues and clients. This stems from the fact that they develop a way of moving through the world based on their perception of technology and machines. Work in any organization requires interacting with people and their feelings, but over time technologists may stop responding to these cues. This leads to a pitfall: the organization starts looking for technicians who seem more professional. By understanding how engineers and technologists see the world, we can enhance their ability to communicate, deal with issues, and relate better to other people. This can be achieved through management policies that take a prescriptive approach to managing and motivating. Such policies should recognize that technological work is driven not by influence and authority but by the productivity of the technologists. Team motivation is another integral part of dealing with competitiveness among technical workers. Management techniques work best in technical fields when technologists have interpersonal relationships and can consult one another based on proximity rather than command.

Despite the fundamental importance of technologists around the globe today, most of them work under extreme pressure in frustrating job environments. The result is burnout from physical and emotional exhaustion. The nature of these jobs demands intense attention and long hours that leave most individuals drained and stressed. Keeping technologists happy is an absolute requirement for any organization or modern business, so managers should remove obstacles to technologists’ productivity. Stress in the work environment, especially criticism from management, can be a depressant, and too much work can end up straining a technical worker’s physical and mental health. Management can devise strategies to counter these challenges. These include varying routines so that long and short shifts alternate, creating programs that encourage relationships among colleagues, limiting how many tasks one worker handles at a time, and encouraging variety in each worker’s routine.

Technologists appreciate respect, not because they have some level of intellectual expertise, but because it is a professional courtesy. This respect is most needed from management, because of the air of authority that lurks within the work environment. For most technologists, respect for a person develops from how tolerable that person is, including how practical his or her suggestions are. It is easier for them to work and self-organize with people who are independent and socially at ease.

The capacity for technical reasoning is only an asset when it leads to the right decisions. Mistakes only add to the workload and create more tension between management and the technologists. The manager, however, must never ignore the technologist despite differences in specialization, opinions, or ideas. Technologists require good leadership just like every other group of personnel. A technologist’s creativity is another trait that a manager can use to advantage. When technologists are able to present and apply their ideas, it lessens the need for consultation on procedures and methodologies that work for both sides. It is nonetheless important that management consult the technologists when making decisions that directly affect or relate to their creative work and programming.

Technologists may be experts and intellectuals in the field of technology; however, even experts require a bit of direction and orientation when they join new organizations.

Training is very important to technologists’ success because it helps them adapt their intellectual strengths to the organization’s principles, procedures and routines.

Other skills also enable technologists to put both old and new knowledge to working advantage. A technologist with all the tools needed to work is a happy technologist. It is much easier to work when all the required equipment is available, which improves efficiency and saves time. It is the manager’s responsibility to ensure that sufficient, fully functional equipment is provided. This includes, for instance, an effective computer system with a reliable internet connection, an office phone line, sufficiently detailed information and instructions on what to do, and caffeine. Technologists work best with caffeinated beverages.

Technologists are not perfect; they tend to over-engineer systems, building architecture to accommodate future capacity. Sometimes this is necessary, but often all that is needed is a quick nudge. Managers therefore need to watch for this tendency and steer the technologist’s attention back to what is required in the present. Managers can also avoid such situations by outlining the scope of work up front. Anything outside that scope that interests the engineer can then be pursued later.

Most technologists come up with ideas on the spot in the course of their duties, which could include formulas or patterns for a particular task. Such ideas may contradict the set procedures for a particular assignment. Managers are nonetheless encouraged to adjust the formal plan when the schedule allows. This motivates technologists to work with ideas they are familiar with, which they can adjust when necessary. Managers should also avoid superficial interference in technical matters. It inhibits workers’ ability to work with confidence, because they are likely to feel undermined and unnecessarily pressured. For example, constant reminders from a manager about the deadline for a particular assignment could leave a technologist de-motivated rather than enthusiastic about finishing it.

To conclude, technicians and technologists represent an important part of today’s world, technology. They therefore supply some of the most significant inputs to the success of other industries. To sustain this field of expertise, educational curricula need to extend technical studies into management, so that geeks and nerds can relate to aspects of work other than machines and tools. Management, for its part, should prioritize the issues of its technical direct reports by building beneficial relationships with them. One way to enhance the work environment and team effort is to foster effective communication skills in engineers and technologists, which improves their interactions with colleagues and customers. Another is to practice effective leadership in top management positions. Others are to apply methodologies from IT practice to projects and concepts that all workers are comfortable with, and finally, to appreciate the efforts of technologists by providing working conditions that enhance their productivity.

Management in any organization or modern business should know that happy geeks are productive geeks.

About the Author

Joseph Venturelli earned his degree in design from the School of Visual Arts in New York City. His debut in healthcare Information Technology began as a system administrator, concentrating on the technical oversight of information systems at Presbyterian Hospital in Charlotte, North Carolina in 1990. Early on Joseph trained and became certified as a system engineer and certified trainer. He has been responsible for leading teams of technologists supporting infrastructure, web services, data center operations, call centers, disaster recovery planning and help desk services, and has managed scores of system implementations over the past twenty five years.

Joseph co-led the creation and implementation of an electronic medical record system, which included full financial, transcription and scheduling integration. Physicians were given office access to the scheduling and clinical documentation platforms. This integration strategy enabled immediate medical record completion, eliminating the need for back-end inspection and rework resources. Joseph has consistently delivered efficiencies through standardization, recruiting and retaining key talent, and launching enthusiastic customer service programs for a variety of professionals, patients and vendors.

Joseph has been published in numerous technical journals and industry magazines and has authored several books, one notably addressing patient advocacy. A seasoned executive, Joseph has worked as the Chief Executive Officer for a Southeast consulting firm, a Chief Information Officer for an ambulatory surgery center, Chief Information Officer for a Midwest county hospital system, and Chief Technology Officer for a New England hospital system.

The Importance of Information Technology Standardization

By Joseph Venturelli

Introduction

Information technology is integral to every business plan. It plays a key role in maintaining and expanding an organization's objectives and strategies. It facilitates communication within an organization, inventory management, information systems management, customer relationship management, and competitiveness through improved product quality. Multinational corporations deal with vast amounts of data, so IT plays an important role in data management. The use of standardized information technology in large corporations therefore offers benefits to companies, individuals, and users alike.

First, information technology standardization offers a set of powerful business tools for large corporations. These tools help fine-tune risk management and business performance through more efficient and sustainable means of operation. Businesses can demonstrate the quality of their customer service and embed best practices within the organization. Standardization promotes a culture of continuous performance within an organization. It establishes scale and increases capacity for efficient task completion. IT standards enable an organization to develop new services and products.

Second, it decentralizes the decision-making process, since executives and all critical users contribute input with the aim of improving corporate performance. Communication and information flow improve among employees, customers, vendors, corporate executives and IT regulatory bodies. Involving all of these parties strengthens the implementation of standards, making it highly cost-effective. Companies consequently realize better returns, since everyone involved knows what is expected of them and there are measures to help them carry out their duties. Because these parties all support IT maintenance, that responsibility does not rest on a single group.

IT standardization also expands an organization's vision and focus. It determines which technology a corporation implements by guiding industry, consumers, users and administration toward the optimum benefits of the standards. All relevant aspects of the industry, such as the technical and essential characteristics of the corporate environment, are thoroughly explored to ensure the rules act as enablers of success. Companies adopt new and productive forms of information storage and communication systems. As companies pursue their economic goals, they are regulated by certain practices, so their vision and focus are aligned with the established IT standard. Through compliance, corporations avoid costs that would otherwise be incurred through a lack of vision or through downtime. Their vision expands to embrace sustainability through the deployment of products that comply with the established standards. A greater understanding of national and regional IT standards makes the company vision easier to realize, since it is framed within those rules.

According to the British Standards Institution, IT standardization minimizes business risk by making risks easier to identify and by supporting mechanisms that mitigate actual and potential hazards and downtime. Because standards focus on improving how things are done, errors are reduced. Standards set out what is expected of corporations, so complex tasks can be handled with ease. Companies develop a strategic portfolio aimed at transforming the business through effective business models and the cultivation of best practices. The internet makes information about other companies available, on which a SWOT analysis can be conducted to help avoid risk factors. Corporations can also learn from the experiences of other firms.

Moreover, large companies can become more sustainable through IT standardization. It facilitates close examination of resource and energy use within an organization. Setting environmentally sensitive standards promotes cost savings and boosts the corporation's reputation. IT promotes communication, information exchange and the development of digital services and products. Consumers and providers become aware of the standards' requirements, which boosts compliance. Developing uniform, pervasive standards facilitates construction, resource use and connections built on the standards that guide large corporations.

Further, large companies become more creative and innovative through IT standardization. Businesses governed by similar rules interact frequently and develop a shared technical vocabulary that drives new advances. Since new technologies are emerging in the market rapidly, successful innovation is vital for a corporation to penetrate new markets and gain a sustainable competitive advantage. Service-industry companies give sufficient attention to their processes and therefore offer quality services. These companies participate actively in developing better ways of carrying out their duties.

Finally, IT standardization provides natural ways of solving problems for large corporations. When a challenge arises, the standards act as a reference point for possible solutions. The rules provide guidelines for technical excellence, are backed by prior testing and implementation, and come with short, clear and easily understandable documentation. The experts involved in setting the standards can also be consulted when difficulties arise during implementation. Standardization thus expands networking across corporate operations. It also promotes timeliness, fairness and openness in activities. All individuals involved contribute through the primary communication channels.

Conclusion

In summary, information technology standardization plays an integral role in large corporations. It provides powerful business and marketing tools, decentralizes decision making and enhances creativity and innovation. In large corporations, IT standardization promotes sustainability, minimizes risk, expands business focus and vision, and increases productivity through natural problem solving.

About the Author

Joseph Venturelli earned his degree in design from the School of Visual Arts in New York City. His debut in healthcare information technology began in 1990 as a system administrator, concentrating on the technical oversight of information systems at Presbyterian Hospital in Charlotte, North Carolina. Early on, Joseph trained and became certified as a system engineer and certified trainer. He has been responsible for leading teams of technologists in infrastructure, web services, data center operations, call centers, disaster recovery planning and help desk services, and has managed scores of system implementations over the past twenty-five years.

Joseph co-led the creation and implementation of an electronic medical record system, which included full financial, transcription and scheduling integration. Physicians were given office access to the scheduling and clinical documentation platforms. This integration strategy enabled immediate medical record completion, eliminating the need for back-end inspection and rework resources. Joseph has consistently delivered efficiencies through standardization, recruiting and retaining key talent, and launching enthusiastic customer service programs for a variety of professionals, patients and vendors.

Joseph has been published in numerous technical journals and industry magazines and has authored several books, including one on patient advocacy. A seasoned executive, Joseph has worked as the Chief Executive Officer for a Southeast consulting firm, a Chief Information Officer for an ambulatory surgery center company, the Chief Information Officer for a Midwest county hospital system, and the Chief Technology Officer for a New England hospital system.
